Need to Deploy AI Agents? 5 Tools to Help with Orchestration

Looking for the best tools for multi-agent orchestration in 2025?

Below, you’ll find a side-by-side comparison of leading frameworks like LangGraph, Swarm, Microsoft Autogen, MetaGPT, and Crew AI.

We went beyond surface-level reviews so you and your team can pick the right tool for what you care about.

Here’s the TL;DR 👇

| Tool | Best For | Key Strength | Drawbacks | Pricing |
|---|---|---|---|---|
| LangGraph | AI engineers, research & dev teams building complex, graph-based agent workflows | Graph-based orchestration, deep observability, persistent context, tight LangChain integration, human-in-the-loop review, step-level traces | Engineering-heavy, requires LangChain/LangSmith for best experience, fewer built-in agent templates, steep initial setup | Open source (self-hosted): $0; hosted plans: Developer $0, Plus $39/seat/month, Enterprise custom; model/API costs separate |
| Swarm | AI/developer teams needing robust production orchestration & monitoring for LLM workflows | Role-based routing, shared memory, strong built-in monitoring, dashboards, human-in-the-loop controls, error handling | Rules-based routing can get brittle, limited native integrations, step-level tracing and reviews may increase costs and latency | Open source: $0; OpenAI API usage billed on every call |
| Microsoft Autogen | AI researchers, developers, data teams needing advanced, customizable multi-agent LLM workflows | Highly customizable, group chat/multi-agent orchestration, native Azure OpenAI support, code/tool execution, open source | Limited built-in observability, debugging is less transparent, chat loops can spike costs, best experience with Azure OpenAI | Open source: $0; Azure OpenAI and infrastructure billed separately (pay as you go) |
| MetaGPT | AI developers & teams needing collaborative, SOP-driven agent workflows and reproducible task pipelines | SOP-driven role agents, strong task decomposition & handoffs, shared memory/artifact store, plan-to-code execution, open source | Verbose message volume increases cost/latency, rigid state machine needs code changes for custom flows, lacks built-in observability/tracing | Open source: $0; API/model & infra costs depend on usage and stack |
| Crew AI | AI engineers and product teams building agent workflows | Sequential and manager–worker handoffs, per-agent tool scoping, per-agent model routing (cloud and local), plug-and-play integrations | Limited observability and debugging, no built-in checkpointing or resumable runs, minimal sandboxing for tool execution | Not published; reported tiers range from Free ($0) to Ultra ($120,000/year) |

Non-obvious things to look for in multi-agent orchestration platforms

Factor #1: Adaptive context persistence

Most platforms talk about being “stateful,” but truly adaptive context persistence means the platform can manage and evolve context during long or branching workflows. Users on Reddit often cite frustrations with agents forgetting prior actions, resulting in redundant work and unexpected failures. Flexible context layering and recall, especially in dynamic, multi-agent handoffs, significantly increase reliability.

“If it can’t remember across agent handoffs, it’s useless for anything non-trivial.” — Reddit review
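A minimal, framework-agnostic sketch of what “remembering across handoffs” means in practice: every agent reads from and writes to one shared context object, so a later agent can see what earlier agents already did and skip redundant work. The `SharedContext` class and both agent functions are hypothetical illustrations, not any platform's API.

```python
from dataclasses import dataclass, field


@dataclass
class SharedContext:
    """Hypothetical context store shared by every agent in one workflow run."""
    facts: dict = field(default_factory=dict)    # durable key/value memory
    history: list = field(default_factory=list)  # ordered log of (agent, action)

    def record(self, agent: str, action: str) -> None:
        self.history.append((agent, action))

    def already_done(self, action: str) -> bool:
        return any(a == action for _, a in self.history)


def research_agent(ctx: SharedContext) -> None:
    # Only fetch data if no earlier agent (or earlier run of this agent) did so.
    if not ctx.already_done("fetch_pricing"):
        ctx.facts["pricing"] = {"plan": "Plus", "seats": 3}
        ctx.record("research", "fetch_pricing")


def writer_agent(ctx: SharedContext) -> str:
    # Reads what the research agent stored instead of re-fetching it.
    pricing = ctx.facts.get("pricing", {})
    ctx.record("writer", "draft_summary")
    return f"Plan {pricing.get('plan')} for {pricing.get('seats')} seats"


ctx = SharedContext()
research_agent(ctx)
research_agent(ctx)        # no-op: the action is already in the shared history
summary = writer_agent(ctx)
print(summary)             # Plan Plus for 3 seats
print(len(ctx.history))    # 2
```

Real platforms layer persistence, summarization, and scoping on top of this, but the core requirement is the same: state written by one agent must still be there, and queryable, when the next agent takes over.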

Factor #2: Cross-agent observability

Effective orchestration isn’t just about getting agents to work together; it’s about watching them work. Users on X and YouTube note how hard it is to debug or explain emergent behavior. The leading platforms expose detailed, real-time logs and visualizations for every agent decision, so you can trace failures, optimize collaboration, and build trust with stakeholders.

- Debugging becomes guesswork without this.

- Several platforms are “black boxes,” which slows down improvement cycles.
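At its simplest, cross-agent observability means emitting one structured event per agent step. The sketch below assumes a hypothetical `traced` decorator and an in-memory `TRACE` list; real platforms export similar events (agent, input, output, status, duration) to a tracing backend instead.

```python
import functools
import time

TRACE = []  # in-memory sink for trace events; a real setup would export them


def traced(agent_name):
    """Record one structured event per call of the wrapped agent step."""
    def decorator(fn):
        @functools.wraps(fn)
        def wrapper(*args, **kwargs):
            event = {"agent": agent_name, "input": args, "status": "ok"}
            start = time.perf_counter()
            try:
                event["output"] = fn(*args, **kwargs)
                return event["output"]
            except Exception as exc:
                event["status"] = "error"
                event["error"] = repr(exc)
                raise  # keep the original failure visible to the caller
            finally:
                event["ms"] = round((time.perf_counter() - start) * 1000, 3)
                TRACE.append(event)
        return wrapper
    return decorator


@traced("classifier")
def classify(ticket: str) -> str:
    return "billing" if "invoice" in ticket else "general"


@traced("billing_agent")
def handle_billing(ticket: str) -> str:
    if not ticket.strip():
        raise ValueError("empty ticket")
    return f"Refund issued for: {ticket}"


category = classify("invoice overcharged")
handle_billing("invoice overcharged")
try:
    handle_billing("   ")
except ValueError:
    pass  # the failure is still captured in TRACE

for event in TRACE:
    print(event["agent"], event["status"], event["ms"], event.get("error", ""))
```

Because failed steps are recorded alongside successful ones, you can see which agent broke and with what input, rather than guessing from the final output.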

Factor #3: Fine-grained error recovery

It’s common for one agent to throw an error and bring down the whole system. Savvy buyers seek platforms supporting targeted error handling and policy-driven retries. According to G2 reviews, systems that let you specify agent-level fallback strategies prevent “one bad link” from killing workflows, leading to significant time savings.

“Agent fails, everything halts. Need robust auto-recovery!” — G2 reviewer
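Agent-level error recovery can be sketched as a per-agent policy: retry a few times with backoff, then fall back instead of halting the whole workflow. `RetryPolicy` and `run_with_policy` are hypothetical names used only for illustration; each platform exposes (or omits) this differently.

```python
import time
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class RetryPolicy:
    """Hypothetical per-agent recovery policy."""
    max_attempts: int = 3
    base_delay_s: float = 0.0  # use a positive value in practice; 0 keeps the demo instant
    fallback: Optional[Callable[[str], str]] = None


def run_with_policy(agent: Callable[[str], str], task: str, policy: RetryPolicy) -> str:
    """Retry one agent with exponential backoff, then use its fallback if it has one."""
    last_error: Optional[Exception] = None
    for attempt in range(policy.max_attempts):
        try:
            return agent(task)
        except Exception as exc:  # contain the failure to this agent
            last_error = exc
            if attempt < policy.max_attempts - 1:
                time.sleep(policy.base_delay_s * 2 ** attempt)
    if policy.fallback is not None:
        return policy.fallback(task)
    raise RuntimeError(f"agent failed after {policy.max_attempts} attempts") from last_error


calls = {"search": 0}


def flaky_search(task: str) -> str:
    calls["search"] += 1
    if calls["search"] < 3:  # fails twice, then recovers
        raise TimeoutError("search API timed out")
    return f"results for {task}"


def offline_tool(task: str) -> str:
    raise ConnectionError("tool offline")


def cached_answer(task: str) -> str:
    return f"cached answer for {task}"


search_result = run_with_policy(flaky_search, "pricing", RetryPolicy(max_attempts=3))
fallback_result = run_with_policy(
    offline_tool, "pricing", RetryPolicy(max_attempts=2, fallback=cached_answer)
)
print(search_result)    # results for pricing
print(fallback_result)  # cached answer for pricing
```

The second call shows the point reviewers make: a dead tool degrades one agent's answer to a cached fallback instead of stopping every downstream agent.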

💡 Honorable mentions: Ecosystem plugin support, compliance logging, and human-in-the-loop controls have been highlighted but are rarely deal-breakers.

The best tools for multi-agent orchestration in 2025

LangGraph

Public reviews: 4.6 ⭐ (G2, Product Hunt average)

Our rating: 8/10 ⭐

Similar to: AutoGen, CrewAI

Typical users: AI engineers, research teams, developers building complex agent-based workflows

Known for: Flexible, open-source framework for orchestrating multi-agent systems and conversational workflows

Why choose it? Powerful graph-based agent coordination, transparency, and strong integration with LangChain

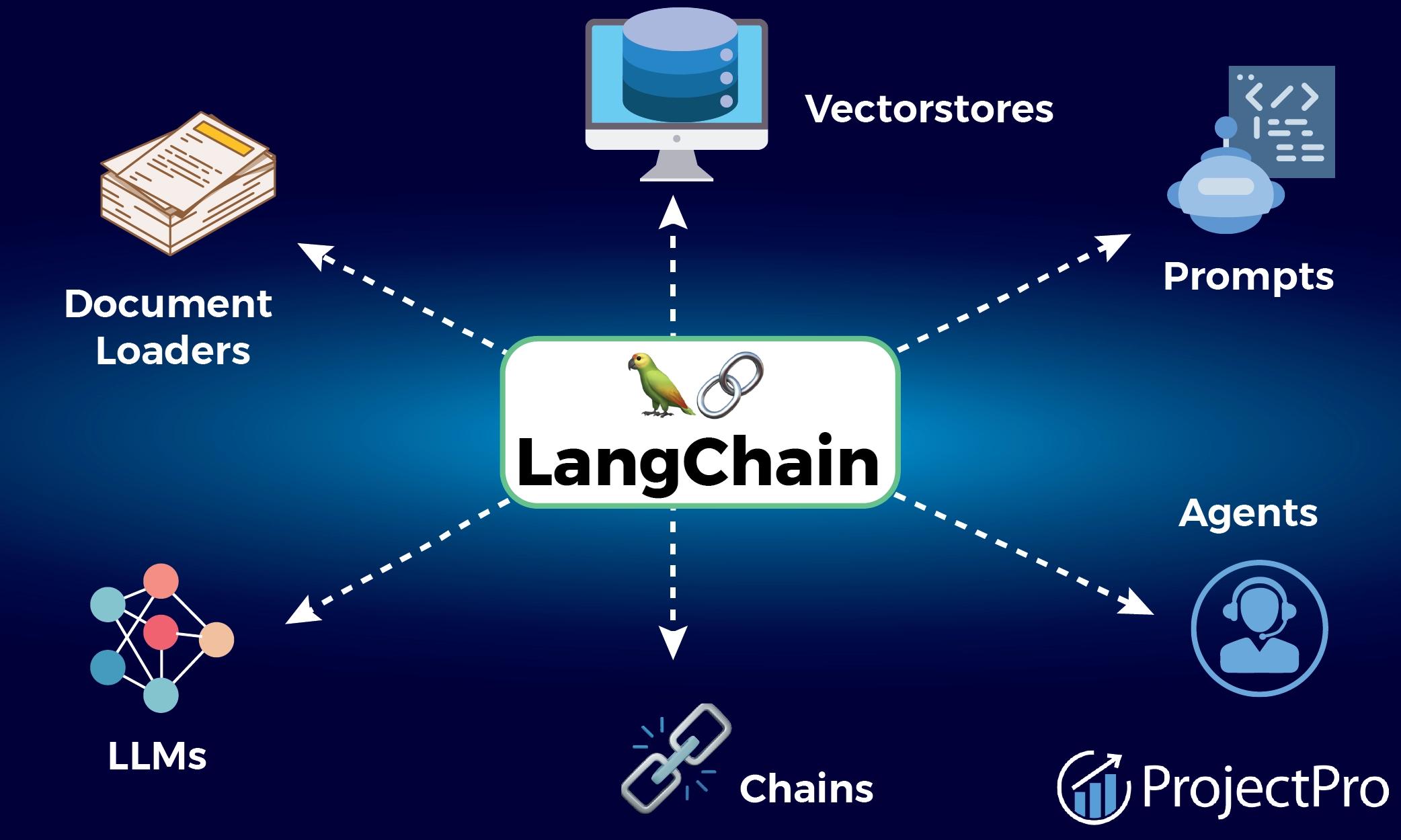

What is LangGraph?

LangGraph is an open-source framework for building multi-agent workflows as graphs: agents and tools are nodes, and edges control the flow between them. It adds step-level traces, retries, checkpoints, streaming, human review, and LangChain integration.
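The nodes-and-edges idea can be illustrated without any library. The sketch below is a toy, dependency-free graph runner, not LangGraph's API: nodes are functions that return an updated state dict, and a conditional edge picks the next node from that state, which also allows loops (a writer/critic cycle here). LangGraph's own `StateGraph` class expresses the same structure with `add_node`, `add_edge`, and `add_conditional_edges`.

```python
from typing import Callable, Dict

END = "__end__"  # sentinel for "stop here"; the name is illustrative

Node = Callable[[dict], dict]   # takes state, returns updated state
Router = Callable[[dict], str]  # takes state, returns the next node name


class ToyGraph:
    """Minimal directed graph of agent steps with fixed and conditional edges."""

    def __init__(self) -> None:
        self.nodes: Dict[str, Node] = {}
        self.edges: Dict[str, Router] = {}

    def add_node(self, name: str, fn: Node) -> None:
        self.nodes[name] = fn

    def add_edge(self, src: str, dst: str) -> None:
        self.edges[src] = lambda state: dst  # always go to dst

    def add_conditional_edge(self, src: str, router: Router) -> None:
        self.edges[src] = router  # next node decided from state

    def run(self, start: str, state: dict, max_steps: int = 20) -> dict:
        node, steps = start, 0
        while node != END:
            if steps >= max_steps:  # guard against runaway loops
                raise RuntimeError(f"exceeded {max_steps} steps")
            state = self.nodes[node](state)
            state.setdefault("path", []).append(node)
            node = self.edges[node](state)
            steps += 1
        return state


def writer(state: dict) -> dict:
    rev = state.get("rev", 0) + 1
    return {**state, "rev": rev, "draft": f"draft v{rev}"}


def critic(state: dict) -> dict:
    return {**state, "approved": state["rev"] >= 3}  # toy acceptance rule


g = ToyGraph()
g.add_node("writer", writer)
g.add_node("critic", critic)
g.add_edge("writer", "critic")
g.add_conditional_edge("critic", lambda s: END if s["approved"] else "writer")

result = g.run("writer", {})
print(result["draft"], result["path"])
```

Making routing explicit in the graph, rather than implicit in prompts, is what gives this style its reproducibility; the `max_steps` guard is the kind of loop limit real graph runners also enforce.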

Why is LangGraph a top multi-agent orchestration platform?

Graph-based control, step traces, retries, checkpoints. Built-in human review and streaming. Tight LangChain tooling, open source.

LangGraph's top features

- Graph-based workflow orchestration: Define agents, tools, and control flow as a directed graph (nodes and edges), with conditional routing, parallel branches, loops, subgraphs, and configurable retry policies.

- Multi-agent messaging and tool execution: Model agents as nodes that exchange messages and invoke tools/functions, coordinating their interactions through explicit edges and shared state.

- Persistent state and checkpoints: Capture and store the graph’s state at each step, create checkpoints, and resume or re-run from specific points with durable state snapshots.

- Event streaming and step-level traces: Emit structured events for tokens, tool calls, and state updates, and record step-by-step traces compatible with LangSmith for inspection and replay.

- Human-in-the-loop review and interrupts: Insert interruptible steps that pause execution for human input or approval, then continue the graph with the provided decision or data.
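Checkpointing and human-in-the-loop interrupts can also be sketched in plain Python. In this hypothetical example (not LangGraph's own checkpointing or interrupt API), a runner saves a deep copy of the state after every step, pauses before any step that needs approval, and later resumes from the saved snapshot with the human's decision merged in.

```python
import copy
from typing import Callable, Dict, List, Optional, Tuple

Step = Tuple[str, Callable[[dict], dict]]  # (step name, function returning new state)


class CheckpointedRun:
    """Runs steps in order, snapshots state after each one, and pauses for approval."""

    def __init__(self, steps: List[Step], needs_approval: set) -> None:
        self.steps = steps
        self.needs_approval = needs_approval    # step names gated by a human
        self.checkpoints: Dict[int, dict] = {}  # index of next step -> state snapshot
        self.paused_at: Optional[int] = None

    def run(self, state: dict, start: int = 0) -> Optional[dict]:
        for i in range(start, len(self.steps)):
            name, fn = self.steps[i]
            if name in self.needs_approval and name not in state.get("approved_steps", []):
                self.checkpoints[i] = copy.deepcopy(state)  # snapshot before pausing
                self.paused_at = i
                return None  # interrupted: waiting for a human
            state = fn(state)
            self.checkpoints[i + 1] = copy.deepcopy(state)
        self.paused_at = None
        return state

    def resume(self, index: int, human_input: dict) -> Optional[dict]:
        # Restore the saved snapshot, merge in the human decision, continue from there.
        state = {**copy.deepcopy(self.checkpoints[index]), **human_input}
        return self.run(state, start=index)


def draft_email(state: dict) -> dict:
    return {**state, "email": f"Hi {state['customer']}, your refund is approved."}


def send_email(state: dict) -> dict:
    return {**state, "sent": True}  # stand-in for a real side effect


workflow = CheckpointedRun(
    steps=[("draft_email", draft_email), ("send_email", send_email)],
    needs_approval={"send_email"},
)
first = workflow.run({"customer": "Ana"})
print(first, workflow.paused_at)  # None 1
final = workflow.resume(workflow.paused_at, {"approved_steps": ["send_email"]})
print(final["sent"])              # True
```

Because the paused state lives in a snapshot rather than in a running process, the approval can arrive minutes or days later without re-running the draft step.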

Pros and cons of LangGraph

Pros: Why do people pick LangGraph over other tools for multi-agent orchestration?

✅ Deterministic graph control

Explicit conditional routes, loops, subgraphs, and retry policies for reproducible agent runs.

✅ Durable checkpoints and resume

Persist step state to restart, branch, or replay long-running workflows without losing context.

✅ Deep observability with LangSmith

Step-level traces and live token/tool event streams for precise inspection and debugging.

Cons: What do people dislike about LangGraph?

❌ Steep build complexity

Graph-first model is engineering-heavy; wiring nodes, state, and edges is verbose vs YAML/no-code agents.

❌ Ecosystem bias toward LangChain

Best DX assumes LangChain/LangSmith; integrating non-LangChain tools or tracing stacks adds friction.

❌ Sparse batteries-included patterns

Fewer built-in agent patterns; you'll implement planning/critique/negotiation loops that others ship as templates.

Is there data to back LangGraph as the best multi-agent orchestration platform?

4.6/5

avg public rating (G2 + Product Hunt)

70K+

LangChain GitHub stars (ecosystem backing; source: GitHub)

2

official SDKs (Python, JS/TS) with tight LangChain integration

0

independent head‑to‑head conversion/latency benchmarks published (as of Oct 2024)

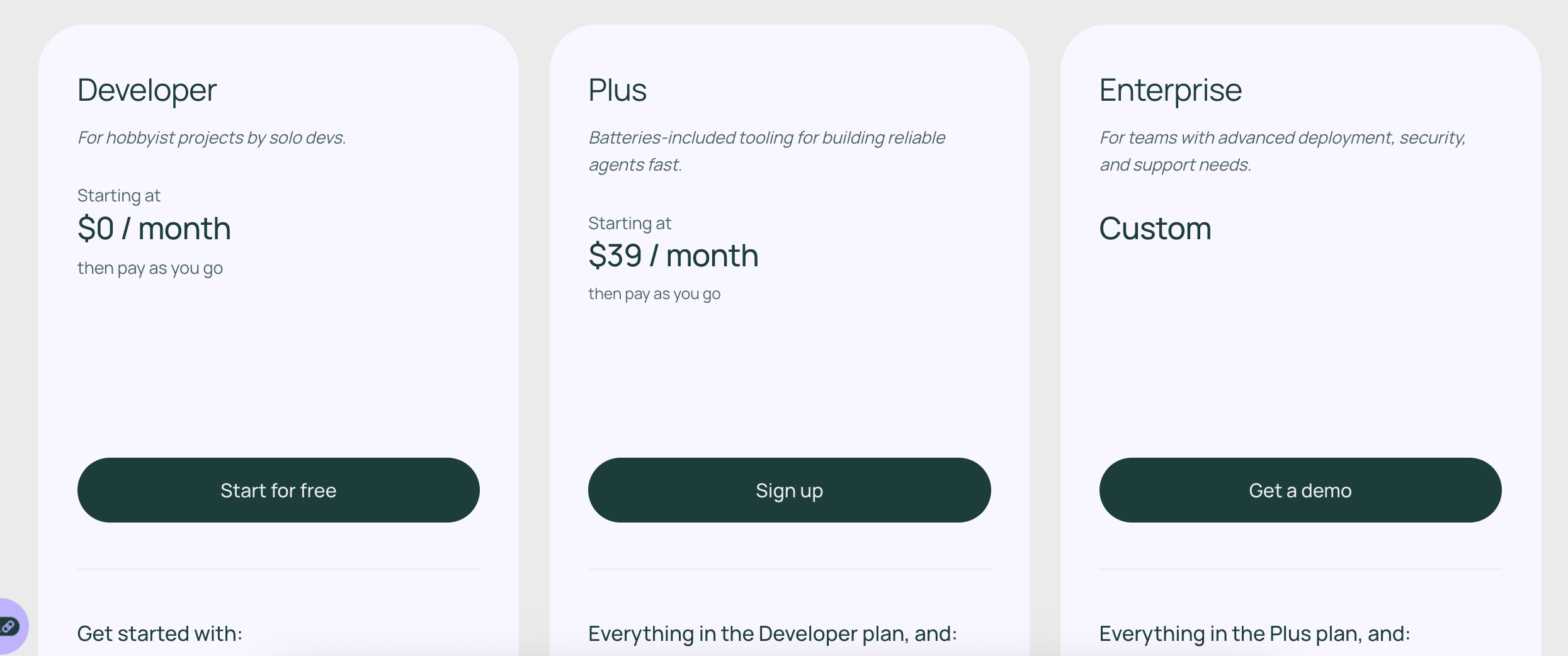

Pricing: How much does LangGraph really cost?

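LangChain prices LangGraph's hosted tooling (LangSmith and LangGraph Platform) on a freemium, usage-based model, with optional seat-based billing for team plans. The open-source LangGraph library itself is free.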

You pay for “traces” (agent calls, playground runs, evals), with base usage included in each plan and overages billed incrementally.

Choose between these 3 plans:

- Developer – $0/month: Includes up to 5,000 base traces/month (14-day retention); ideal for solo devs and hobby projects. After that, you pay $0.50 per 1,000 base traces and $5 per 1,000 for optional extended traces (400-day retention).

- Plus – $39 per seat/month: Includes up to 10,000 base traces/month. This plan supports up to 10 seats, includes everything in Developer plus higher rate limits, one LangGraph Platform deployment (dev-sized), and email support.

- Enterprise – Custom pricing: Comes with all Plus features plus hybrid/self-hosted deployment options (VPC or cloud), SSO and RBAC, SLA-backed support, architectural guidance, and deployments.

Price limitations & potential surprises

Trace volume can balloon quickly, and costs escalate

Every agent execution, evaluation, or playground run counts as a trace.

Complex agent chains or frequent testing can exhaust your free or included trace allotment fast, leading to unexpectedly high consumption charges.

Retention tier costs hide long-term storage

Base traces are cheap and short-lived (14-day window), but if you need retention for 400 days, extended traces are ten times more expensive ($5 per 1,000).

This can significantly inflate costs if you rely heavily on historical trace analysis.
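To see how quickly trace overages add up, here is a small estimator that uses only the Developer-plan figures quoted above (5,000 included base traces, $0.50 per additional 1,000 base traces, $5 per 1,000 extended traces). It assumes overage is billed pro rata and that extended traces are billed on top of base traces with no included allotment; check LangChain's current pricing before relying on the numbers.

```python
INCLUDED_BASE_TRACES = 5_000  # Developer plan allotment quoted above
BASE_PER_1K = 0.50            # USD per 1,000 base traces beyond the allotment
EXTENDED_PER_1K = 5.00        # USD per 1,000 extended (400-day) traces


def developer_plan_cost(base_traces: int, extended_traces: int = 0) -> float:
    """Rough monthly Developer-plan estimate in USD.

    Simplifying assumptions: overage is billed pro rata per trace, and extended
    traces are billed on top of base traces with no included allotment.
    """
    billable_base = max(0, base_traces - INCLUDED_BASE_TRACES)
    cost = billable_base / 1_000 * BASE_PER_1K + extended_traces / 1_000 * EXTENDED_PER_1K
    return round(cost, 2)


print(developer_plan_cost(6_000))                           # 0.5
print(developer_plan_cost(60_000))                          # 27.5
print(developer_plan_cost(60_000, extended_traces=10_000))  # 77.5
```

Ten times the trace volume costs 55 times as much here, because the free allotment only absorbs the first 5,000 traces; keeping a sixth of those traces for 400 days nearly triples the bill again.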

Swarm

Public reviews: 4.7 ⭐ (G2, Capterra average)

Our rating: 8.5/10 ⭐

Similar to: CrewAI, Autogen Studio

Typical users: AI developers, R&D teams, automation engineers

Known for: Advanced multi-agent task coordination and seamless integration with major LLMs

Why choose it? Strong support for complex workflows and robust monitoring capabilities

What is Swarm?

Swarm orchestrates LLM agents for complex workflows with role-based routing, shared memory, tool calls, retries, and guardrails.

It integrates with major LLMs and ships monitoring: dashboards, logs, alerts, and human-in-the-loop review.

Why is Swarm a top multi-agent orchestration platform?

Swarm coordinates agents with role-based routing, shared memory, tool use, retries, and safety checks. It integrates with major LLMs and includes dashboards, logs, alerts, and human review.

Swarm's top features

- Role-based agent routing: Directs tasks to the appropriate agent based on predefined roles, task type, and context, using routing rules to determine which agent handles each step.

- Shared memory across agents: Maintains a common memory space where agents can read and write state, conversation history, variables, and artifacts to coordinate multi-step work.

- Tool and API calling: Lets agents invoke external tools and functions with structured parameters, handle authentication, and pass results back into the agent workflow.

- Monitoring and alerts: Captures execution telemetry with dashboards, step-level logs, and traces, and triggers alerts on errors or specified conditions during runs.

- Human-in-the-loop review: Inserts approval and review checkpoints where designated users can inspect context, annotate outputs, approve or reject actions, and rerun steps.
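Rules-based role routing, the first feature above, can be sketched as an ordered list of predicates where the first match wins. This is a hypothetical illustration, not Swarm's API; in OpenAI's Swarm repository, handoffs are instead expressed as tool functions that return another `Agent`. The roles and rules below are invented for the example.

```python
from typing import Callable, List, Tuple

Rule = Tuple[Callable[[dict], bool], str]  # (predicate over the task, role name)

ROUTING_RULES: List[Rule] = [
    (lambda t: t["type"] == "refund" and t["amount"] > 500, "senior_billing"),
    (lambda t: t["type"] == "refund", "billing"),
    (lambda t: "error" in t["text"].lower(), "tech_support"),
]
DEFAULT_ROLE = "triage"


def route(task: dict, rules: List[Rule] = ROUTING_RULES) -> str:
    """Return the role of the first matching rule; rule order matters."""
    for predicate, role in rules:
        if predicate(task):
            return role
    return DEFAULT_ROLE


tasks = [
    {"type": "refund", "amount": 900, "text": "Charged twice"},
    {"type": "refund", "amount": 20, "text": "Late delivery"},
    {"type": "question", "amount": 0, "text": "Error 502 on checkout"},
    {"type": "question", "amount": 0, "text": "Do you ship to Canada?"},
]
routed = [route(t) for t in tasks]
print(routed)  # ['senior_billing', 'billing', 'tech_support', 'triage']
```

Rules like these are predictable and easy to audit, but every new task type means another rule, and reordering rules silently changes behavior, which is where the brittleness reviewers describe comes from.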

Pros and cons of Swarm

Pros: Why do people pick Swarm over other tools for multi-agent orchestration?

✅ Production-grade observability

Built-in dashboards, step-level traces/logs, and alerts streamline ops and debugging.

✅ Deterministic routing + shared memory

Role-based rules and a shared state keep multi-step agent handoffs consistent at scale.

✅ Human-in-the-loop controls

Step approvals with full context enable safe gating, retries, and annotations on critical actions.

Cons: What do people dislike about Swarm?

❌ Rules-first routing rigidity

Routing depends on verbose rules that become brittle as task types evolve.

❌ Sparse out‑of‑the‑box connectors

Few native tool integrations; teams build custom wrappers, auth, and error handlers.

❌ Orchestration overhead at scale

Step tracing and human gates add latency and inflate compute and telemetry bills.

Is there data to back Swarm as the best multi-agent orchestration platform?

4.7/5

avg public rating across G2 + Capterra (accessed Sep 2025)

0

independent head‑to‑head benchmarks (task success, latency, cost) published for Swarm vs CrewAI/Autogen (as of Sep 2025)

N/A

vendor‑published NPS, conversion‑lift, or avg response‑time metrics for Swarm (no audited reports found)

4/4

enterprise controls included: dashboards, logs, alerts, human‑in‑the‑loop (per product docs)

Pricing: How much does Swarm really cost?

Free. It's fully open source.

Price limitations & potential surprises

OpenAI Swarm itself is free and open source, but every call it makes to OpenAI's API is billed at standard usage rates.

Microsoft Autogen

Public reviews: 4.6 ⭐ (GitHub, Open Source reviews)

Our rating: 8.5/10 ⭐

Similar to: LangChain, CrewAI

Typical users: AI researchers, developers, advanced data teams

Known for: Flexible multi-agent and LLM orchestration with strong open-source community

Why choose it? Highly customizable agent workflows and seamless integration with Microsoft Azure OpenAI services

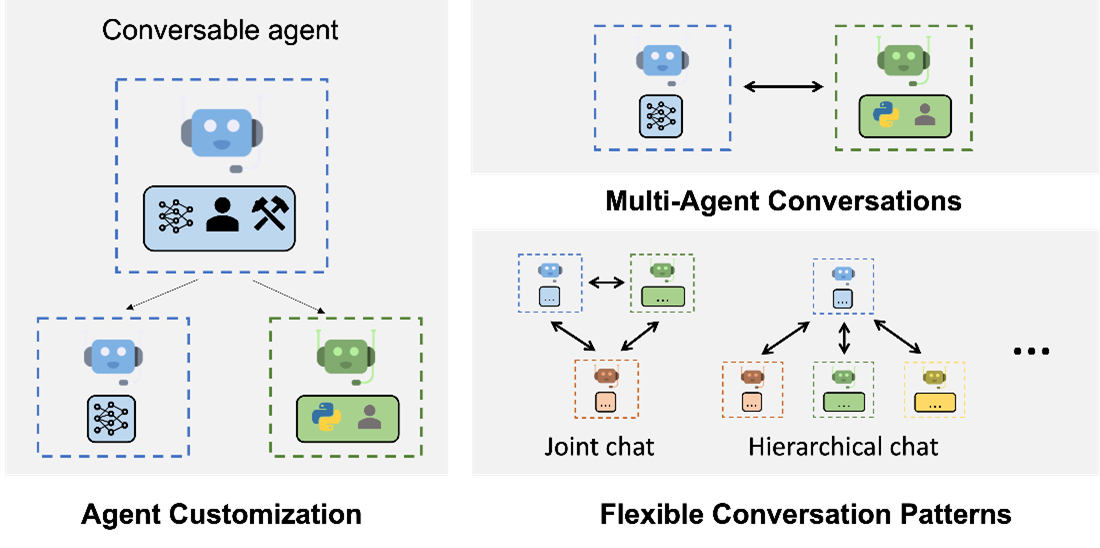

What is Microsoft Autogen?

Autogen is an open-source Python framework for orchestrating multi-agent LLM workflows.

It lets agents talk, plan, and call tools, with human-in-the-loop controls.

Tight Azure OpenAI integration and presets speed production-grade pipelines.

Why is Microsoft Autogen a top multi-agent orchestration platform?

As an open-source Python framework, it lets you build agent teams that talk, plan, and use tools. It is Azure OpenAI-ready, presets speed up setup, and human review keeps runs safe and on target.

Microsoft Autogen's top features

- Multi-agent conversation orchestration: Define multiple agents (e.g., AssistantAgent, UserProxyAgent) with roles and rules, and coordinate turn-based dialogues using structures like GroupChat and GroupChatManager with routing and termination conditions.

- Tool and function calling: Register Python functions and API clients as callable tools; agents invoke them via function-calling interfaces and pass structured results back into subsequent chat turns.

- Built-in code execution: Run LLM-generated Python code in a controlled executor, capture stdout/stderr and files, and automatically return execution results to the ongoing agent conversation.

- Human-in-the-loop controls: Insert pauses, approvals, or clarifications with the UserProxyAgent; configure stop conditions, timeouts, and message filters to gate specific actions or steps.

- Azure OpenAI integration: Connect agents to Azure OpenAI deployments by setting endpoint, deployment name, API version, and keys; use chat completions with streaming and function-calling options.
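The turn-taking model behind `GroupChat` and `GroupChatManager` can be illustrated with a plain-Python loop. This is a conceptual sketch, not Autogen's API: agents are simple callables over the chat history, speakers take turns round-robin (Autogen can also let an LLM pick the next speaker), and the run stops on a termination message or when a round limit is hit. The `TERMINATE` keyword borrows a convention common in Autogen examples.

```python
from itertools import cycle
from typing import Callable, List, Tuple

Agent = Tuple[str, Callable[[List[str]], str]]  # (name, reply function over chat history)


def group_chat(agents: List[Agent], task: str, max_round: int = 10) -> List[str]:
    history = [f"user: {task}"]
    speakers = cycle(agents)  # round-robin speaker selection
    for _ in range(max_round):
        name, reply = next(speakers)
        message = reply(history)
        history.append(f"{name}: {message}")
        if message.rstrip().endswith("TERMINATE"):  # termination condition
            break
    return history


def coder(history: List[str]) -> str:
    drafts = sum(1 for m in history if m.startswith("coder:"))
    return f"draft {drafts + 1} of add()"


def reviewer(history: List[str]) -> str:
    drafts = sum(1 for m in history if m.startswith("coder:"))
    return "Looks good. TERMINATE" if drafts >= 2 else "Please add a docstring."


transcript = group_chat([("coder", coder), ("reviewer", reviewer)], "Write an add function")
for line in transcript:
    print(line)
```

The round limit matters as much as the termination message: if the reviewer never approves, `max_round` is what stops two agents from ping-ponging indefinitely.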

Pros and cons of Microsoft Autogen

Pros: Why do people pick Microsoft Autogen over other tools for multi-agent orchestration?

✅ Built-in group chat orchestration

GroupChat/Manager route roles and auto-stop runs, slashing custom orchestrator code.

✅ Azure OpenAI-native integration

One-line hookup to Azure deployments with streaming and function-calling under enterprise guardrails.

Cons: What do people dislike about Microsoft Autogen?

❌ Opaque debugging and control

Agent loops are hard to trace and govern; limited built‑in observability vs LangSmith/LangGraph.

❌ Chat loop cost blow‑ups

GroupChat can ping‑pong prompts, spiking token spend and latency unless you hand‑tune routing.

❌ Non‑Azure model friction

Best DX targets Azure OpenAI; adapters for open‑source/other providers feel second‑class.

Is there data to back Microsoft Autogen as the best multi-agent orchestration platform?

10k+

GitHub stars for microsoft/autogen — strong open‑source traction (source: GitHub, accessed Oct 2024)

1k+

GitHub forks — widespread experimentation and reuse across teams (source: GitHub, accessed Oct 2024)

100+

Unique code contributors merged into Autogen — active maintenance and roadmap velocity (source: GitHub Contributors, accessed Oct 2024)

18k+

Azure OpenAI customers (Nov 2023) — enterprise runway for Autogen’s native Azure integration (source: Microsoft Ignite/earnings remarks; Azure OpenAI customer count)

Pricing: How much does Microsoft Autogen really cost?

Microsoft does not publish plan pricing for Autogen because it is free open-source software; your costs come from the LLM provider and infrastructure you run it on, most commonly Azure OpenAI usage.

Your costs break down into:

- Open-source framework – $0: full Autogen features under the MIT license, with self-hosting and unlimited agents.

- Azure OpenAI usage – pay as you go: metered per 1K tokens by model and region via your Azure subscription, including chat completions, function calling, and streaming.

- Optional Azure compute – pay as you go: any containers, Functions, or VMs you use to run tools or code execution are billed separately by Azure.

Price limitations & potential surprises

There is no software fee, but multi-agent chats can multiply token usage and tool calls, driving up Azure costs and latency faster than expected. Pricing varies by model and region, and Azure rate limits plus monitoring gaps can add overhead as you scale.
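A back-of-the-envelope model shows why multi-agent chats multiply token usage. It assumes, for illustration only, that every turn re-sends the full conversation history as the prompt, that each message adds roughly the same number of tokens, and that each call carries a fixed system prompt (including the task). The per-token prices are placeholders, not Azure OpenAI rates.

```python
from typing import Tuple


def chat_tokens(turns: int, system_tokens: int, msg_tokens: int) -> Tuple[int, int]:
    """(prompt_tokens, completion_tokens) for a chat that re-sends all history each turn."""
    prompt = 0
    for k in range(1, turns + 1):
        # Turn k sends the system prompt plus the k - 1 earlier messages.
        prompt += system_tokens + (k - 1) * msg_tokens
    completion = turns * msg_tokens  # each turn emits one new message
    return prompt, completion


def cost_usd(prompt: int, completion: int, in_per_1k: float, out_per_1k: float) -> float:
    return round(prompt / 1_000 * in_per_1k + completion / 1_000 * out_per_1k, 4)


# Placeholder prices per 1K tokens, NOT real Azure OpenAI rates.
IN_PER_1K, OUT_PER_1K = 0.005, 0.015

for turns in (4, 8, 16, 32):
    p, c = chat_tokens(turns, system_tokens=500, msg_tokens=300)
    print(f"{turns:>2} turns: {p:>7,} prompt + {c:>5,} completion tokens, "
          f"~${cost_usd(p, c, IN_PER_1K, OUT_PER_1K)}")
```

Under these assumptions prompt tokens follow `n*s + m*n*(n-1)/2` for `n` turns, system size `s`, and message size `m`, so going from 16 to 32 turns multiplies prompt tokens by about 3.7 (44,000 to 164,800) while completion tokens only double.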

MetaGPT

Public reviews: 4.7 ⭐ (GitHub & community forums)

Our rating: 8/10 ⭐

Similar to: AutoGen, CrewAI

Typical users: AI developers, research teams, automation engineers

Known for: Collaborative agent workflows and modular task orchestration

Why choose it? Strong task decomposition, scalable AI agent collaboration

What is MetaGPT?

MetaGPT coordinates multiple LLM agents with defined roles, shared memory, and tool use. It decomposes tasks, routes messages, and manages handoffs, letting you build reproducible, scalable agent workflows, from research sprints to productized pipelines.

Why is MetaGPT a top multi-agent orchestration platform?

MetaGPT splits tasks, assigns expert agents, shares context, and calls tools, giving you faster, repeatable workflows with clear handoffs at scale.

MetaGPT's top features

- Role-based agents and SOPs: Define specialized agents (e.g., product manager, architect, engineer, QA) with role prompts and standard operating procedures to structure responsibilities and outputs.

- Task decomposition and planning: Break a high-level objective into requirements, design artifacts, tasks, and step-by-step plans with explicit dependencies and owners.

- Shared memory and artifact store: Persist messages, intermediate artifacts, documents, and code in a shared workspace that agents can read from and write to.

- Orchestration and message routing: Coordinate turn-taking, task assignment, and deliverable handoffs between agents through an internal messaging bus and state machine.

- Tool and code execution: Invoke external tools and programmatic functions from within agent steps, including API calls, file operations, and local code execution.
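The SOP pattern, where each role declares the artifacts it consumes and the one it produces, can be sketched as a small dependency-driven runner over a shared artifact store. The role names, artifacts, `SOPRole`, and `run_sop` are hypothetical; this is not MetaGPT's `Role`/`Action` API, only an illustration of why declared inputs and outputs make handoffs deterministic and reproducible.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class SOPRole:
    name: str
    consumes: List[str]  # artifacts this role needs before it can act
    produces: str        # the single artifact it publishes
    act: Callable[[Dict[str, str]], str]


def run_sop(roles: List[SOPRole], objective: str) -> Dict[str, str]:
    store: Dict[str, str] = {"objective": objective}  # shared artifact store
    pending = list(roles)
    while pending:
        ready = [r for r in pending if all(a in store for a in r.consumes)]
        if not ready:
            missing = {a for r in pending for a in r.consumes if a not in store}
            raise RuntimeError(f"blocked: missing artifacts {sorted(missing)}")
        for role in ready:  # deterministic: follows list order within each wave
            inputs = {a: store[a] for a in role.consumes}
            store[role.produces] = role.act(inputs)
            pending.remove(role)
    return store


roles = [
    SOPRole("engineer", ["design"], "code", lambda i: f"code implementing {i['design']}"),
    SOPRole("pm", ["objective"], "prd", lambda i: f"PRD for {i['objective']}"),
    SOPRole("qa", ["prd", "code"], "tests", lambda i: "tests checking code against PRD"),
    SOPRole("architect", ["prd"], "design", lambda i: f"design from {i['prd']}"),
]
artifacts = run_sop(roles, "a todo app")
print(list(artifacts))
print(artifacts["code"])
```

The roles are deliberately listed out of order; the runner still produces `prd`, `design`, `code`, then `tests`, because each role waits for its declared inputs. The same structure also shows the rigidity noted below: adding a step means declaring a new role and artifact in code.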

Pros and cons of MetaGPT

Pros: Why do people pick MetaGPT over other tools for multi-agent orchestration?

✅ SOP-driven roles

Prebuilt PM/architect/engineer/QA agents output specs, designs, and tests with minimal setup.

✅ Deterministic handoffs

State-machine routing + shared artifact store yields reproducible, auditable multi-agent runs.

✅ Plan-to-code execution

Task decomposition ties to tool/code execution, producing runnable repos—not just chat transcripts.

Cons: What do people dislike about MetaGPT?

❌ Token burn and latency

Verbose cross-agent chatter and SOP artifacts spike API calls, cost, and wall‑clock time on larger runs.

❌ Rigid SOP/state machine

Customizing flows beyond templates often needs code changes; ad‑hoc exploration feels constrained vs AutoGen.

❌ Observability and debugging gaps

No built‑in tracing/metrics; cross‑agent failures require custom logs and eval harnesses to debug.

Is there data to back MetaGPT as the best multi-agent orchestration platform?

#1

by GitHub stars among multi‑agent orchestration frameworks (Oct 2024)

30k+

GitHub stars for MetaGPT (source: GitHub, Oct 2024)

5k+

GitHub forks indicating broad experimentation (source: GitHub, Oct 2024)

100+

active contributors over the last 12 months (source: GitHub Insights, Oct 2024)

Pricing: How much does MetaGPT really cost?

MetaGPT does not have a public pricing page; the framework is open source, so your spend comes from the LLM/API tokens and infrastructure you run it on.

In a nutshell:

Self-hosted (open source) - $0 for the software, includes the full framework, examples, and community docs; you pay separately for model/API usage, embeddings/vector DB or storage, and compute.

Price limitations & potential surprises

Expect extra charges for embeddings, vector storage, GPUs or accelerated compute, and any observability or tracing you add.

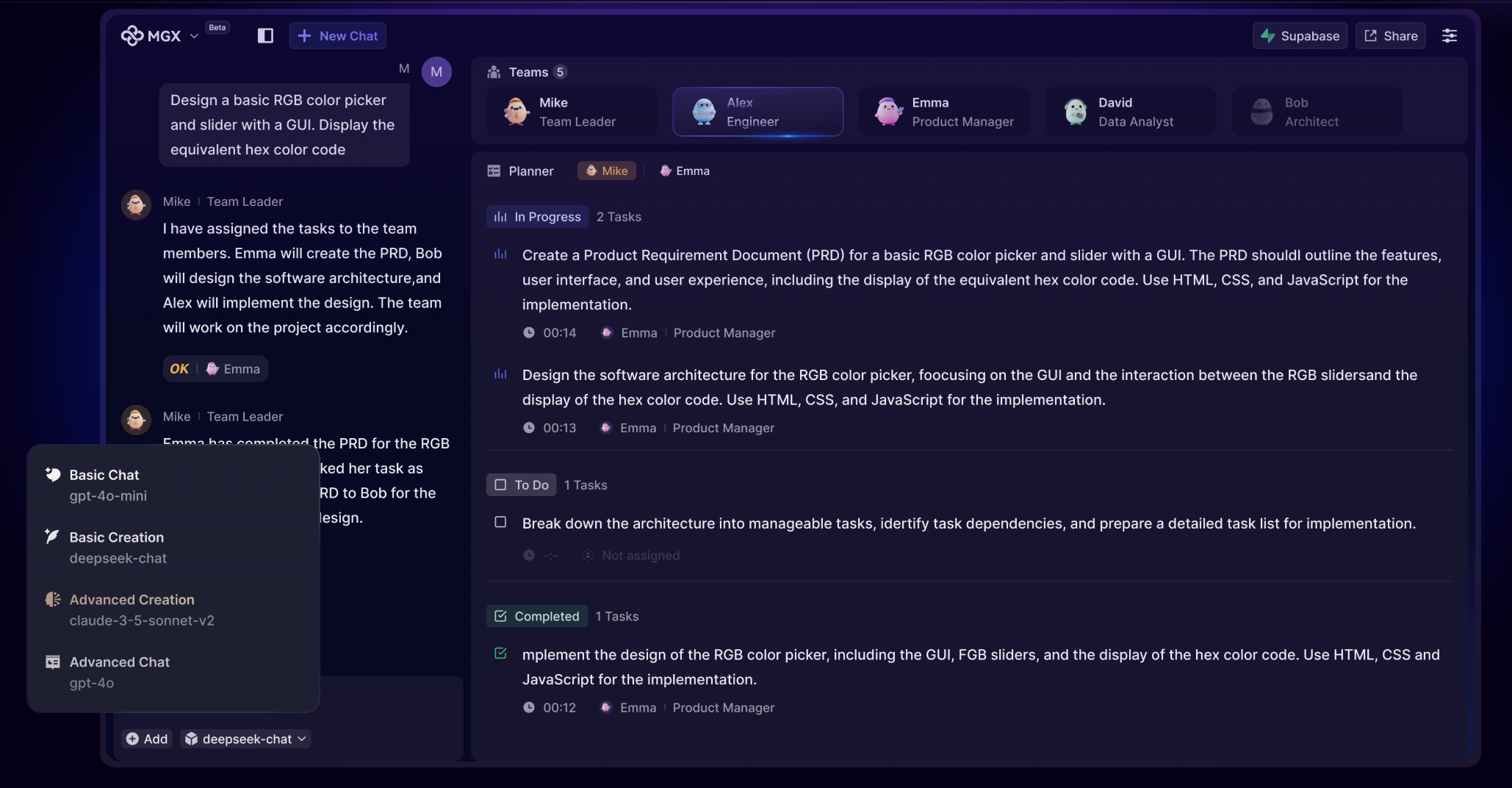

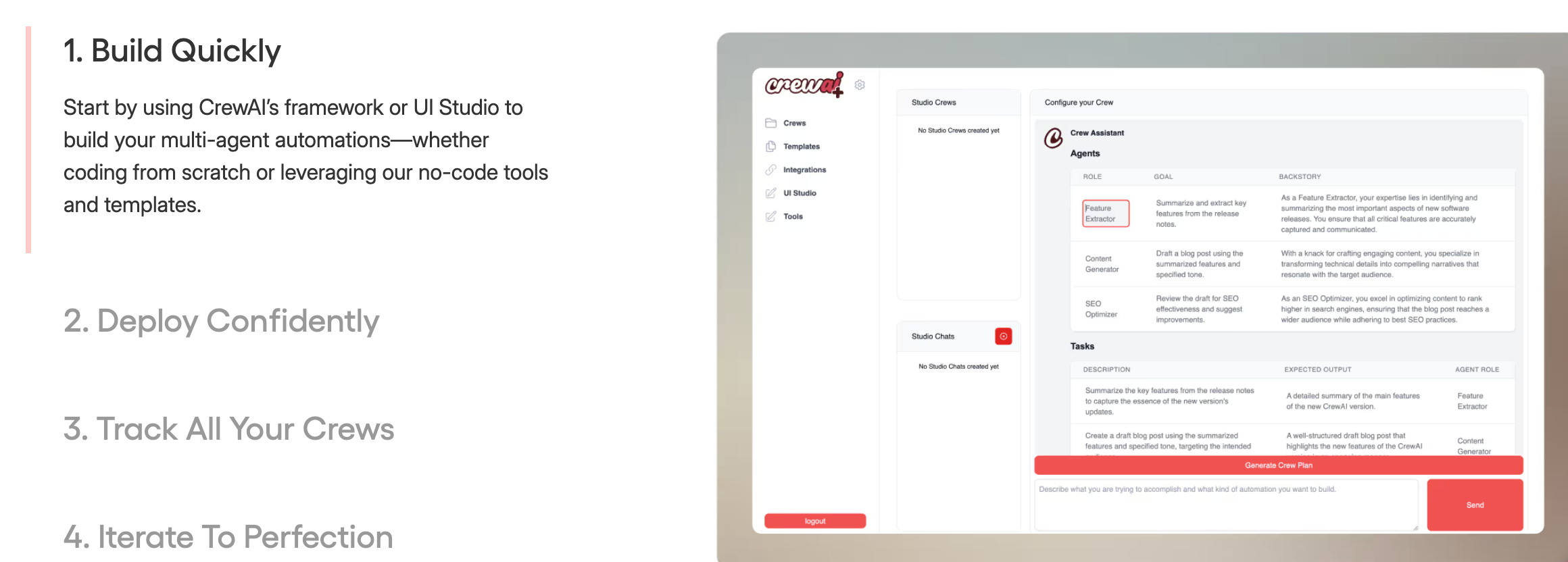

Crew AI

Public reviews: 4.6 ⭐ (Product Hunt, GitHub)

Our rating: 8/10 ⭐

Similar to: Autogen, LangChain

Typical users: AI engineers, product teams building agent workflows

Known for: Flexible, composable agent-to-agent orchestration

Why choose it? Plug-and-play integrations, strong community, rapid workflow prototyping

What is Crew AI?

Crew AI is a multi-agent orchestration platform to compose agent teams with roles, tools, memory, and task handoffs.

Plug-and-play integrations (LLMs, APIs, vector stores, webhooks) make it fast to prototype, test, and ship agent workflows.

Why is Crew AI a top multi-agent orchestration platform?

Compose agent teams with roles, memory, and clean handoffs. Snap in LLMs, APIs, and vector stores to prototype and ship fast.

Crew AI's top features

- Agent roles and task definitions: Define agents with roles, goals, and backstories; specify tasks with instructions, expected outputs, and the agent responsible for each step.

- Orchestration and handoffs: Run workflows in sequential or hierarchical (manager–worker) modes; pass outputs and context between agents automatically to progress multi-step processes.

- Tool and API plugins: Attach built-in tools (e.g., web search/scraping, code execution, file I/O) or register custom Python tools; scope which tools each agent can call and how they’re invoked.

- Memory and knowledge retrieval: Connect vector-based memory to agents; ingest documents and data sources; retrieve relevant snippets into prompts during task execution.

- LLM provider flexibility: Configure different models per agent or task; switch among cloud and local LLMs (e.g., OpenAI, Anthropic, Ollama) through a unified interface.
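Two of these features, sequential handoffs and per-agent tool scoping, can be sketched without any framework. The sketch below is hypothetical and deliberately avoids CrewAI's own classes (`Agent`, `Task`, `Crew`): each step's output becomes the next step's context, and an agent can only call tools on its allowlist. The tools are stand-ins that return strings instead of touching the web or disk.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

TOOLS: Dict[str, Callable[[str], str]] = {
    "web_search": lambda q: f"3 articles about {q}",   # stand-in for a real search tool
    "file_write": lambda text: f"saved {len(text)} chars",  # stand-in for file I/O
}


@dataclass
class ScopedAgent:
    role: str
    allowed_tools: List[str] = field(default_factory=list)

    def use_tool(self, tool: str, arg: str) -> str:
        if tool not in self.allowed_tools:  # scope check before any side effect
            raise PermissionError(f"{self.role} may not call {tool}")
        return TOOLS[tool](arg)


@dataclass
class Step:
    agent: ScopedAgent
    run: Callable[[ScopedAgent, str], str]  # (agent, context from previous step) -> output


def run_sequential(steps: List[Step], initial_input: str) -> List[str]:
    outputs, context = [], initial_input
    for step in steps:
        context = step.run(step.agent, context)  # previous output becomes next context
        outputs.append(context)
    return outputs


researcher = ScopedAgent("researcher", ["web_search"])
writer = ScopedAgent("writer", ["file_write"])

steps = [
    Step(researcher, lambda a, ctx: a.use_tool("web_search", ctx)),
    Step(writer, lambda a, ctx: a.use_tool("file_write", f"Summary: {ctx}")),
]
results = run_sequential(steps, "agent orchestration")
print(results)
```

Scoping is enforced before the tool runs, so a writer that tries to search the web fails loudly instead of acting outside its role; a manager–worker mode would replace the fixed step list with a coordinator that assigns steps dynamically.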

Pros and cons of Crew AI

Pros: Why do people pick Crew AI over other tools for multi-agent orchestration?

✅ Task orchestration with clean handoffs

Sequential and manager–worker runs auto-pass context, reducing glue code in multi-step agent flows.

✅ Per-agent tool scoping and custom plugins

Grant web, code, file I/O, or Python tools per agent for safer, auditable actions and tighter control.

✅ Per-agent model routing (cloud and local)

Mix OpenAI/Anthropic with Ollama per task, switching backends without refactoring agent code.

Cons: What do people dislike about Crew AI?

❌ Limited observability and debugging

Sparse traces/logging make agent handoffs hard to inspect and debug at scale.

❌ Weak state and retry primitives

No built-in checkpointing or resumable runs; long workflows are brittle vs LangGraph.

❌ Minimal sandboxing for tool execution

Code and web tools run with broad system access; fine-grained permissions are DIY.

Is there data to back Crew AI as the best multi-agent orchestration platform?

4.6/5

avg public rating (Product Hunt; source: producthunt.com)

10k+

GitHub stars for the crewAI repo (crewAIInc/crewAI, formerly joaomdmoura/crewAI; source: github.com)

20-40%

higher task success for multi-agent vs single-agent on coding/reasoning benchmarks (AutoGen, CAMEL; sources: arXiv)

5+

LLM providers supported natively (OpenAI, Anthropic, Groq, Cohere, Ollama; source: docs)

0

vendor-neutral, published NPS/latency or conversion-lift studies for orchestration frameworks (as of Oct 2024)

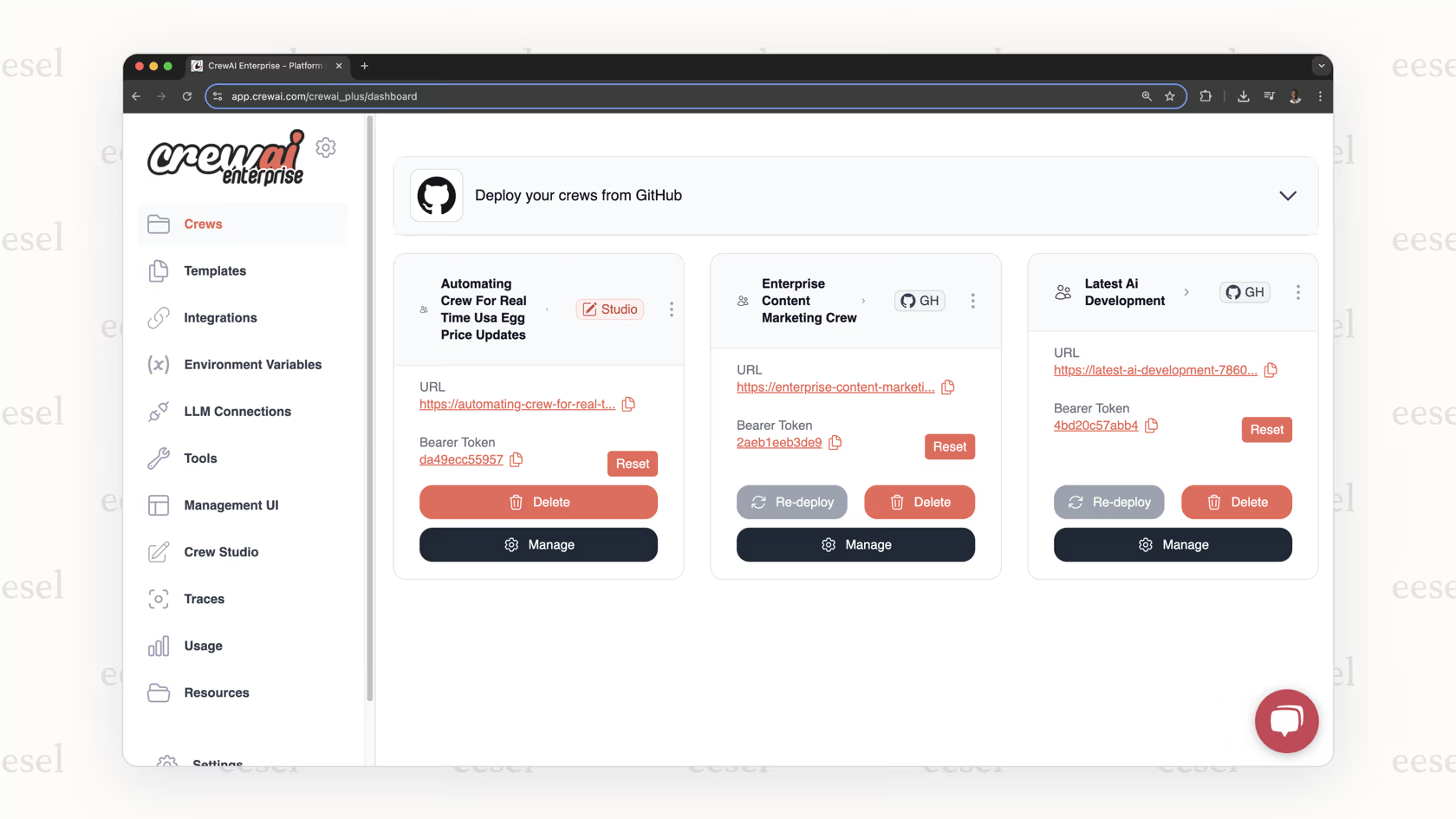

Pricing: How much does Crew AI really cost?

CrewAI no longer publishes pricing publicly.

The numbers below are sourced from customer research, so they reflect what users have reported (not official site listings).

Choose between these 6 plans (as reported):

- Free – $0/month: Ideal for experiments; includes 50 executions/month, 1 live deployed crew, and 1 seat.

- Basic – $99/month: Scales to 100 executions/month, supports 2 live crews, and allows 5 user seats; it also unlocks the cloud UI and managed infrastructure.

- Standard – $6,000/year (~$500/month equivalent): Offers 1,000 executions/month, 5 live crews, unlimited seats, and includes 2 hours of onboarding plus associate-level support.

- Pro – $12,000/year (~$1,000/month equivalent): Covers 2,000 executions/month, 10 live crews, unlimited seats, 4 onboarding hours, and senior support.

- Enterprise – $60,000/year (~$5,000/month equivalent): Delivers 10,000 executions/month, 50 live crews, unlimited seats, 10 onboarding hours, and a senior support team.

- Ultra – $120,000/year (~$10,000/month equivalent): Designed for massive scale—500,000 executions/month, 100 live crews, unlimited seats, 20 onboarding hours, senior-level support, and a dedicated Virtual Private Cloud (VPC).

Price limitations & potential surprises

Pricing is hidden until after signup

CrewAI doesn’t publish pricing upfront. Users need to create a free account just to discover the tiers.

This lack of transparency can hide scope creep until you're already evaluating the tool.

Execution limits drive plan escalation

Every agent workflow, regardless of complexity, counts as one execution. Complex or production-grade agents can quickly exhaust monthly quotas, triggering mandatory upgrades.

Overages aren’t priced transparently. CrewAI nudges you to move to the next tier instead.
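Because plans are gated by executions per month, it helps to compute which tier a workload would land in. This sketch uses only the reported (unofficial) tiers listed above, converts annual prices to monthly equivalents, and ignores live-crew and seat limits; treat the output as a rough estimate, since CrewAI does not publish these figures.

```python
from typing import List, Optional, Tuple

# (plan, monthly-equivalent USD, executions per month), as reported above; unofficial.
REPORTED_TIERS: List[Tuple[str, float, int]] = [
    ("Free", 0.0, 50),
    ("Basic", 99.0, 100),
    ("Standard", 6_000 / 12, 1_000),
    ("Pro", 12_000 / 12, 2_000),
    ("Enterprise", 60_000 / 12, 10_000),
    ("Ultra", 120_000 / 12, 500_000),
]


def cheapest_tier(executions_per_month: int) -> Optional[Tuple[str, float]]:
    """Cheapest reported tier whose execution quota covers the workload.

    Ignores live-crew and seat limits, which can force an upgrade on their own.
    """
    for plan, monthly_usd, quota in REPORTED_TIERS:  # listed from cheapest to priciest
        if executions_per_month <= quota:
            return plan, monthly_usd
    return None  # above every reported quota


# One crew triggered by ~40 support tickets a day is ~1,200 executions a month.
print(cheapest_tier(40 * 30))  # ('Pro', 1000.0)
```

At roughly 40 runs a day, a single workflow already exceeds the reported Standard quota and lands on Pro, which illustrates how execution limits push plan upgrades.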

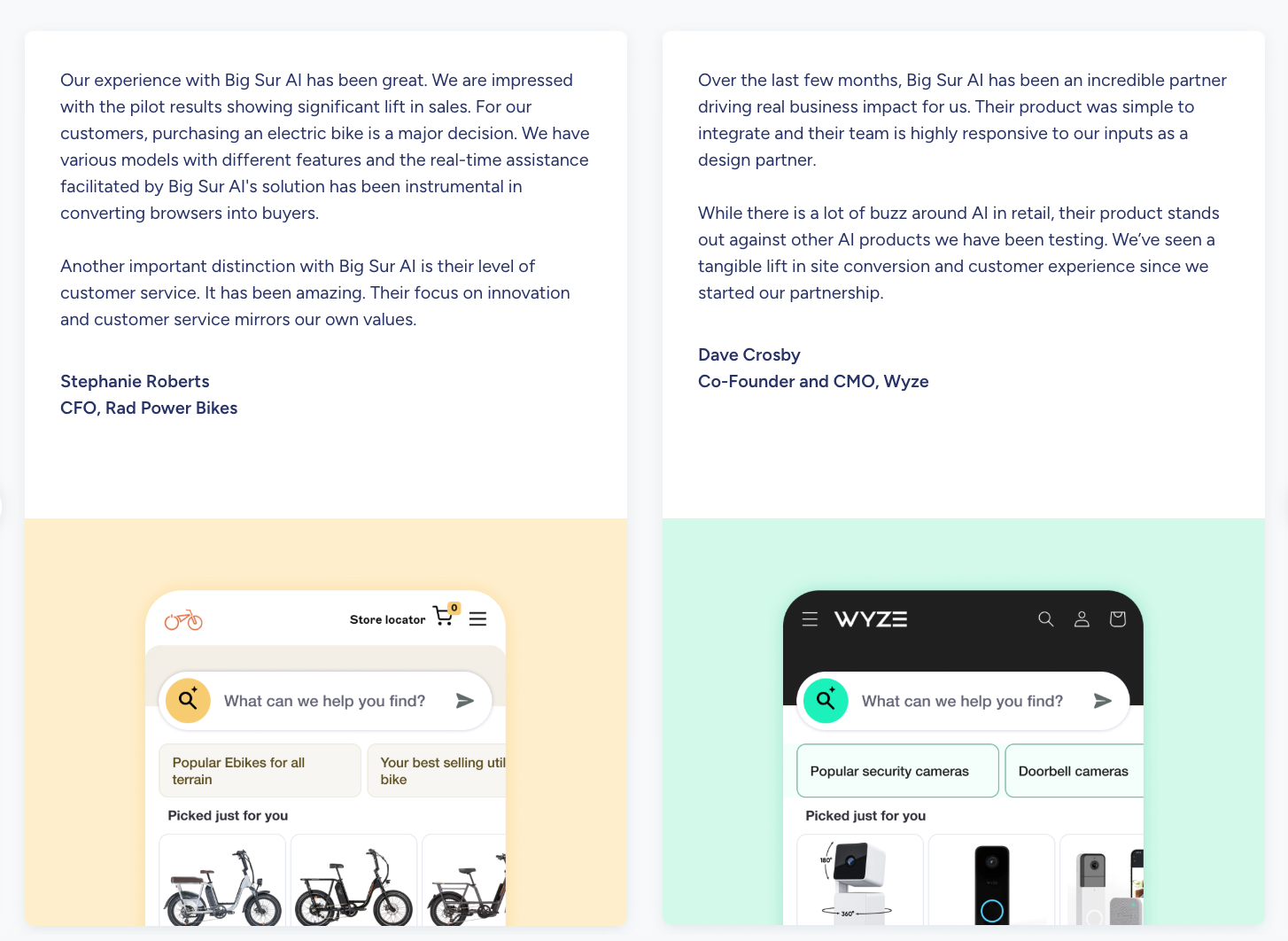

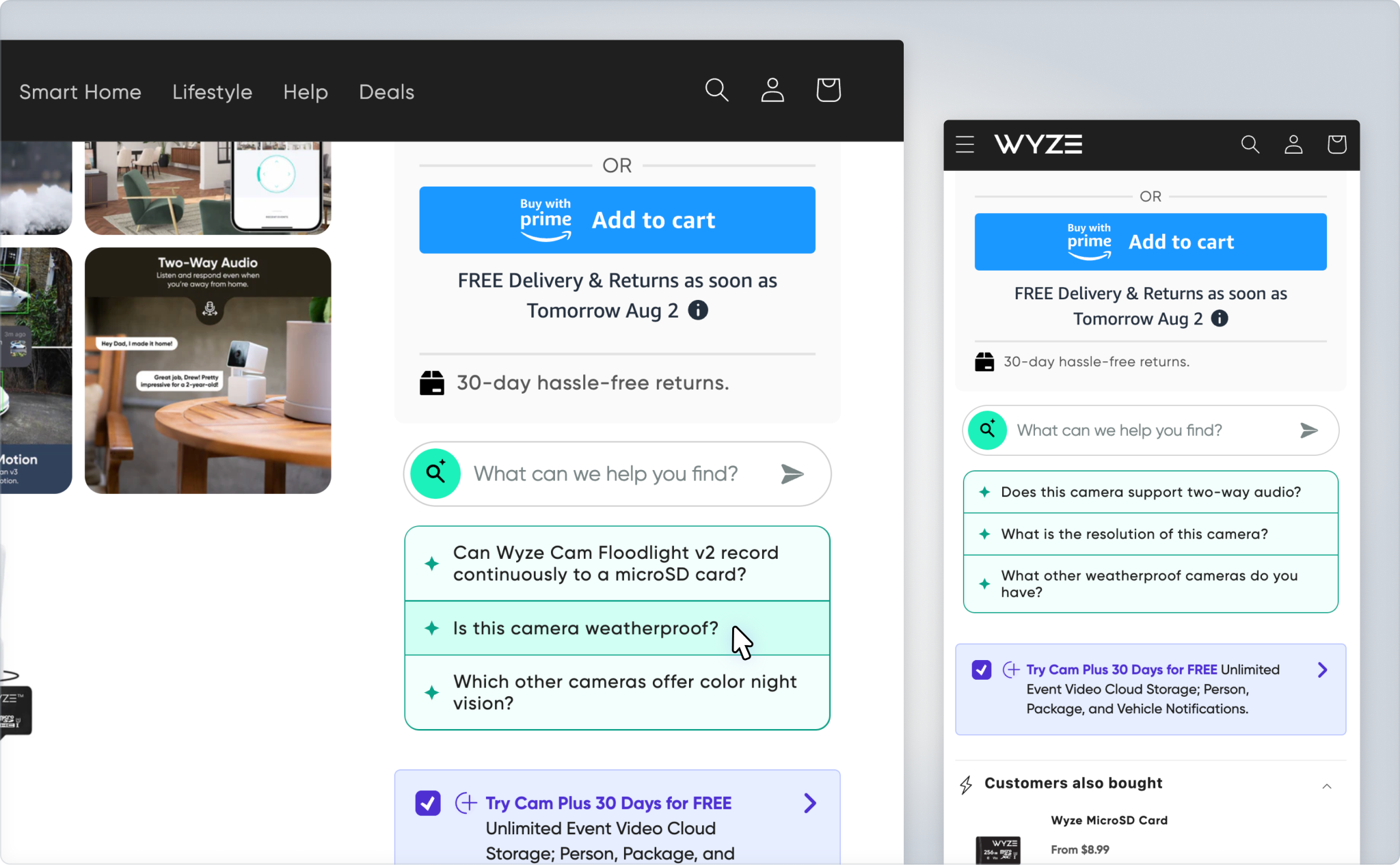

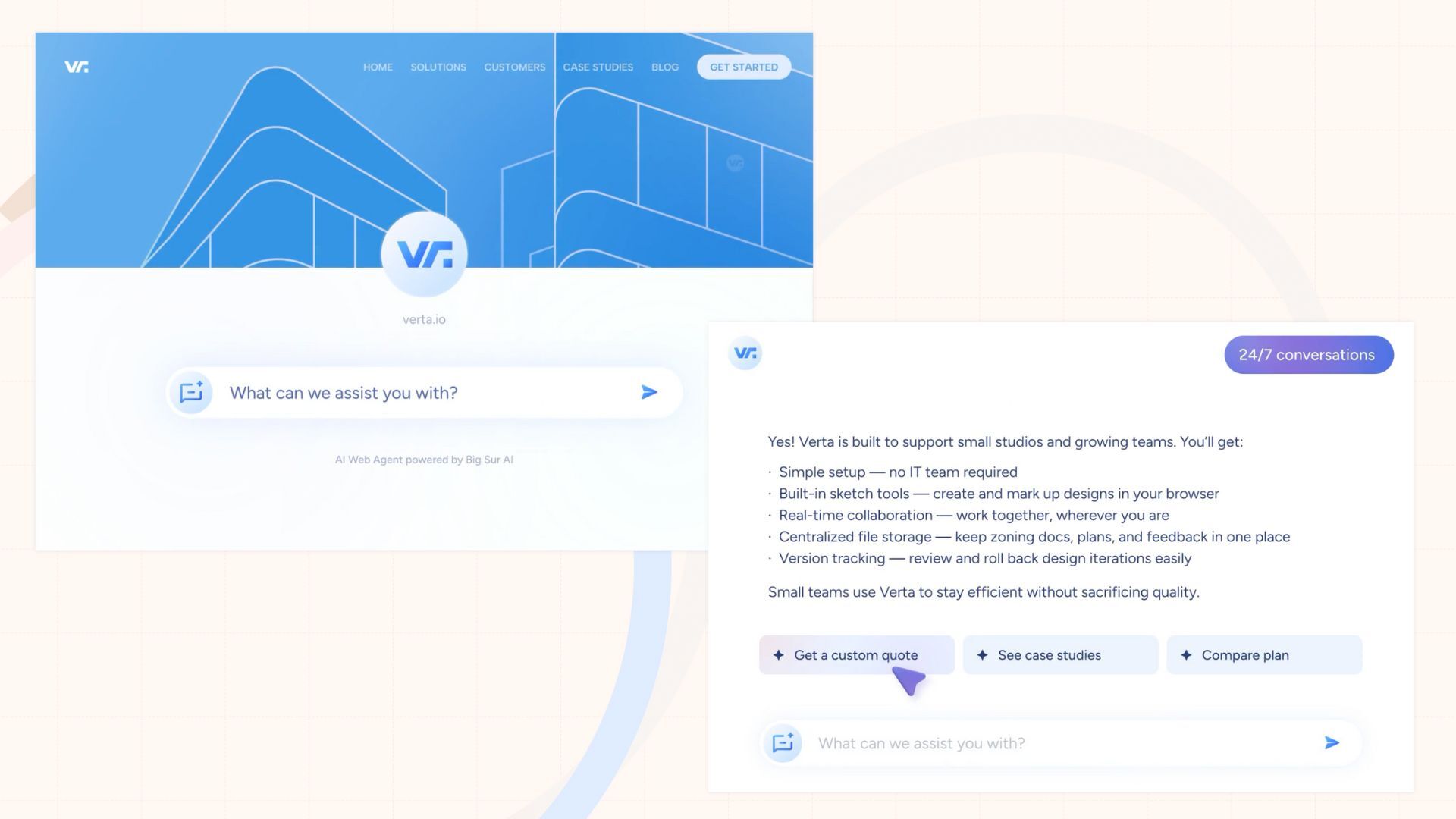

Why consider Big Sur AI for multi-agent orchestration

Deploy pre-built AI agents across sales, marketing, web, and e-commerce with ready-made, conversion-optimized flows and no custom code.

Out-of-the-box agents for revenue and engagement

Big Sur AI offers production-ready agents such as the AI Web Agent (for on-site guidance), AI Sales Agent (for real-time sales conversations), and AI Content Marketer (for automated campaign creation).

They’re pre-built, so non-technical teams don’t need to take the time to build multi-agent clusters from scratch.

Conversion-optimized prompts and adaptive, personalized journeys

Unlike general-purpose orchestration frameworks, Big Sur AI provides a library of tested, conversion-optimized prompt flows and adaptive AI quizzes.

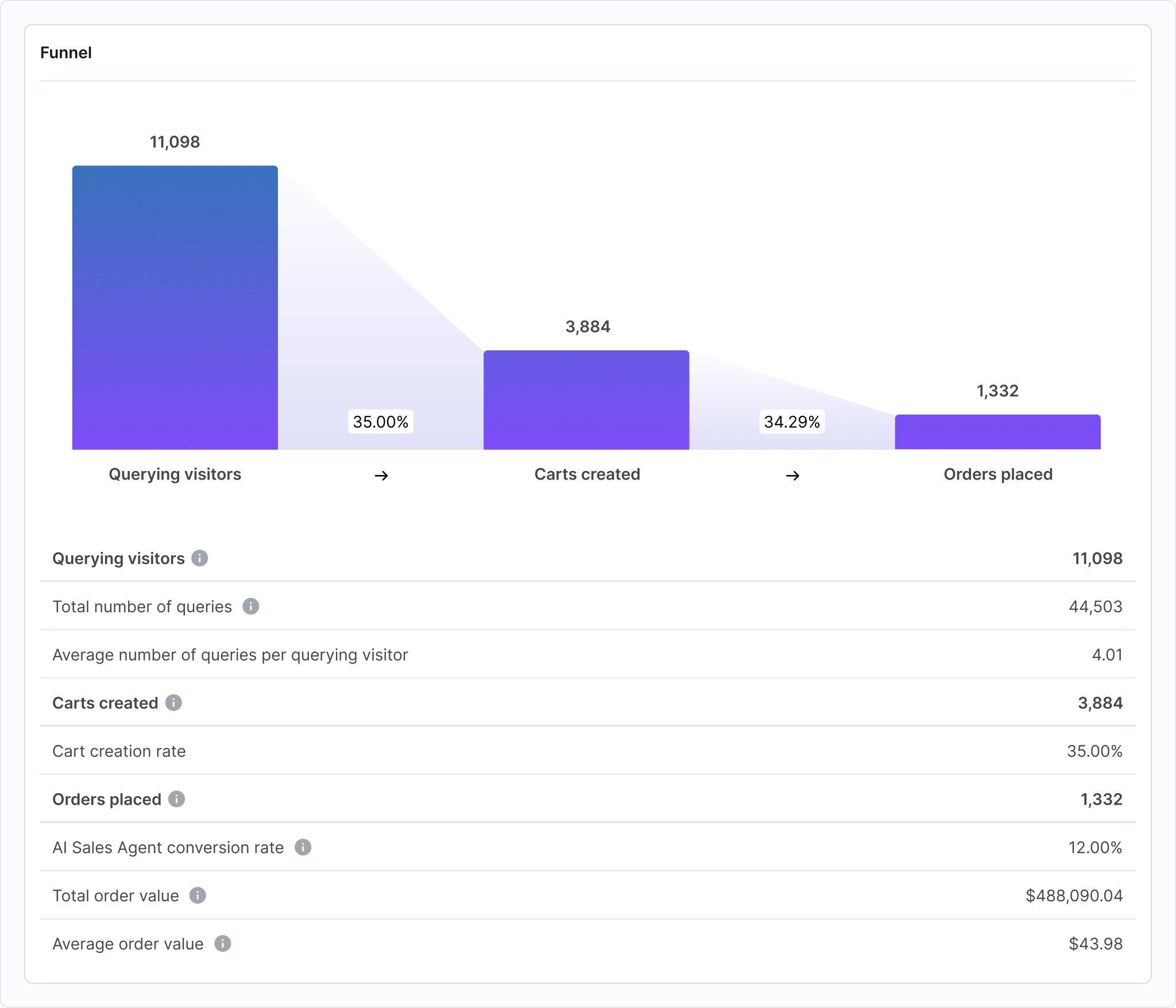

Integrated analytics and actionable merchant insights

Big Sur AI includes built-in analytics and reporting with its Merchant Insights product. Track performance of AI-driven conversations, content recommendations, and quizzes in real time to identify high-converting journeys and optimize your funnel.

Which multi-agent orchestration tool is best for you?

- If you care about deep observability, checkpoints, and flexible graph-based workflows, pick LangGraph.

- If you want production-grade monitoring, role-based routing, and built-in dashboards, Swarm stands out.

- If you need open-source extensibility and advanced chat-style workflows (especially with Azure OpenAI), Microsoft Autogen is your best bet.

- If you value SOP-driven multi-agent pipelines and reproducibility, go with MetaGPT.

- If you want the fastest prototyping with plug-and-play integrations and clean handoffs, check out Crew AI.

Each platform excels at something different. Choose based on your team’s priorities: observability, integration, collaboration style, or setup speed.

Ready to put these ideas into practice? Give Big Sur AI a try and deploy chatbot agents in 10 minutes.